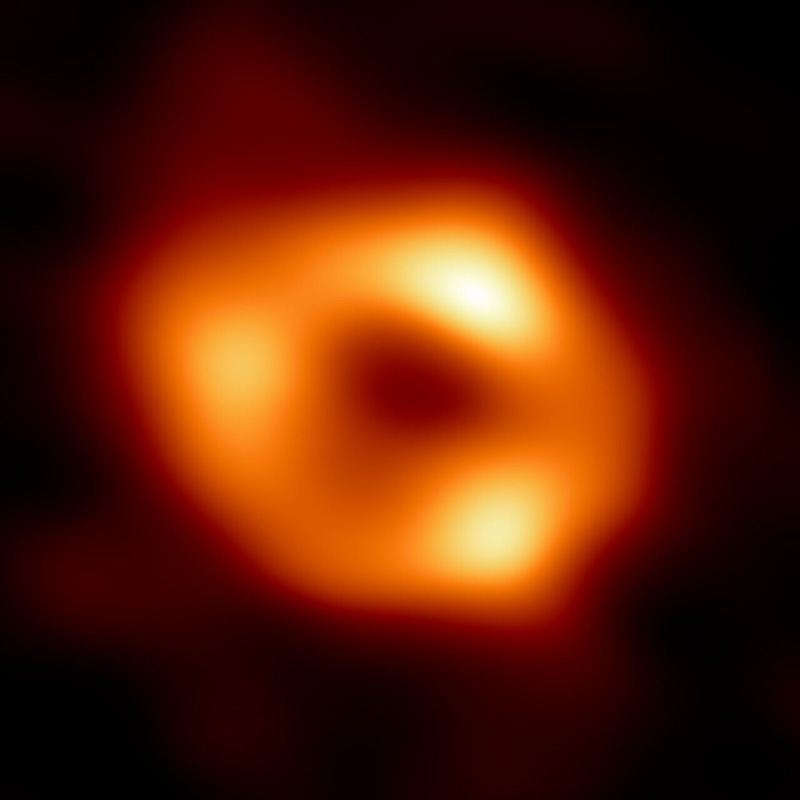

Last week we saw the first image of the black hole at the center of the Milky Way, an incredible result in which supercomputers played an essential role. More than 300 researchers from 80 institutes around the world relied on Frontera, a supercomputer hosted at the Texas Advanced Computing Center (TACC).

The massive amount of data collected by the Event Horizon Telescope (EHT) Collaboration had to be compared against what has been called the “largest black hole simulation library ever”, a series of models based on the known physics of black holes.

“We produced countless simulations and compared them with the data. The result is that we have a number of models that explain nearly all of the data,” said Charles Gammie, a researcher at the University of Illinois at Urbana-Champaign. “It’s great because it explains not just the Event Horizon data, but the data from other instruments. It’s a victory for computational physics.”

Frontera ran most of the simulations: as reported by HPCwire, the final analyses required the equivalent of 80 million CPU hours, plus another 20 million hours run on the National Science Foundation (NSF) Open Science Grid. The latter takes advantage of unused CPU cycles across a network, similar to distributed computing platforms like BOINC.

Frontera is today the 13th largest supercomputer in the world, capable of reaching 23.5 petaflops in LINPACK thanks to more than 448 thousand cores from approximately 16,000 Intel Xeon Platinum 8280 processors (Cascade Lake CPUs with 28 cores each).

At the moment, it is not clear what “CPU hours” means in this specific case, that is, whether an hour is counted per entire CPU or per single core inside the chips. In the latter case, the 80 million hours would correspond to approximately 8 days of uninterrupted computation by the whole Frontera supercomputer, to which the computations on the Open Science Grid must be added, for a total of 10-15 days of real computation. On a single-core CPU, 100 million CPU hours would equate to more than 11,400 years of computing.
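The arithmetic behind these estimates can be checked with a short sketch. It uses only the figures quoted in the article and assumes, purely for illustration, that the whole of Frontera works on the job with perfect scaling and that a year has 365 days. All variable and function names are hypothetical.

```python
# Figures quoted in the article
FRONTERA_CPU_HOURS = 80_000_000   # hours attributed to Frontera
OSG_CPU_HOURS = 20_000_000        # hours attributed to the Open Science Grid
FRONTERA_CPUS = 16_000            # approximate number of Xeon Platinum 8280 chips
CORES_PER_CPU = 28

HOURS_PER_DAY = 24
HOURS_PER_YEAR = 24 * 365         # assumption: non-leap year


def wall_clock_days(cpu_hours: float, parallel_units: int) -> float:
    """Days of wall-clock time if the work is spread evenly over
    `parallel_units` units (assumes perfect scaling, which is an idealization)."""
    return cpu_hours / parallel_units / HOURS_PER_DAY


frontera_cores = FRONTERA_CPUS * CORES_PER_CPU

# Interpretation 1: one "CPU hour" is one hour on a single core
days_if_core_hours = wall_clock_days(FRONTERA_CPU_HOURS, frontera_cores)

# Interpretation 2: one "CPU hour" is one hour on a whole 28-core chip
days_if_chip_hours = wall_clock_days(FRONTERA_CPU_HOURS, FRONTERA_CPUS)

# All 100 million hours on one single-core CPU
single_core_years = (FRONTERA_CPU_HOURS + OSG_CPU_HOURS) / HOURS_PER_YEAR

print(f"Frontera cores:            {frontera_cores:,}")
print(f"Days (per-core hours):     {days_if_core_hours:.1f}")
print(f"Days (per-chip hours):     {days_if_chip_hours:.1f}")
print(f"Years on a single core:    {single_core_years:,.0f}")
```

Under these assumptions the per-core reading gives roughly a week of full-machine time, while the per-chip reading gives about 208 days, which is why the interpretation of “CPU hours” matters so much for the estimate.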
